published 2024-09-22

I was introduced to Tim Harford's Cautionary Tales by way of Roman Mars' 99% Invisible. The featured episode was Episode 3 - LaLa Land: Galileo’s Warning. He covers the La La Land / Moonlight mix-up at the 2017 Oscars, and how the "safeguards" in the system used to deliver the winners' envelopes actually caused more problems than they solved. Give it a listen; it's only 30 minutes long and absolutely fascinating. He covers the core concepts of all the rambling below.

To supplement the show, also see this article by Benjamin Bannister, who argues that a major design change to the cards themselves could have helped prevent the error, even if the presenters were still handed the wrong card.

...or watch the video Vox made about it, along with other excellent examples of how design can make our lives better (voting and prescription pill bottles!).

The book used to explain the mechanics of that night was Normal Accidents: Living with High-Risk Technologies, by Charles Perrow. Charles was inspired to investigate complex and potentially catastrophic systems after the Three Mile Island accident. His main argument was that in a system that is both highly complex and tightly coupled, failure is inevitable.

You can also read Tim's article on Normal Accidents pre-Oscar fiasco here.

Below are key concepts from the book.

···

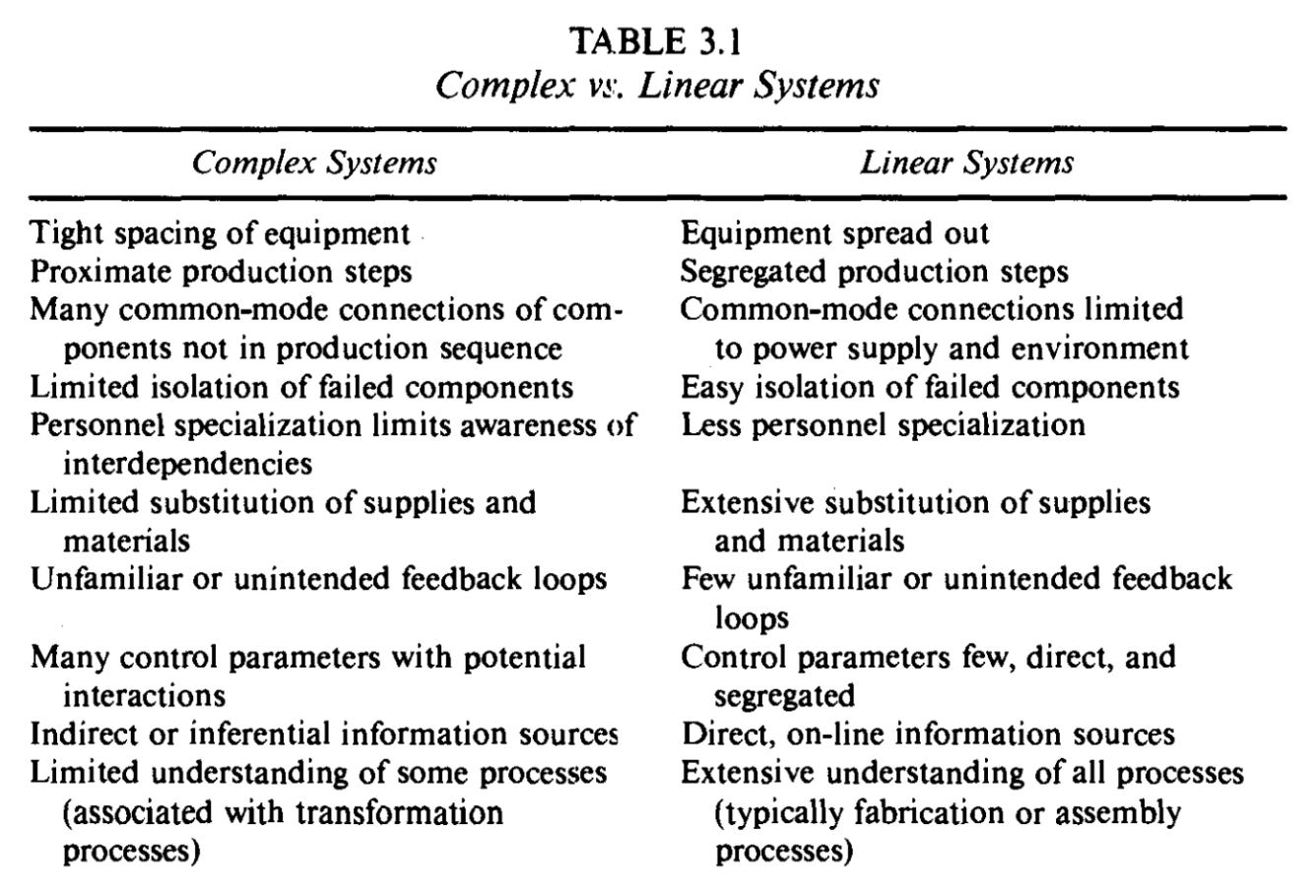

There are two kinds of interactions:

Complex - when one component can interact with one or more other components outside of the normal production sequence, either by design or not. These interactions are unfamiliar, unplanned, or unexpected. They are also not visible or immediately comprehensible.

Linear - interactions of one component in DEPOSE (Design, Equipment, Procedures, Operations, Supplies/materials, and Environment) with one or more components that precede or follow it immediately in the sequence of production. They are familiar and expected in the production/maintenance sequence. Even when these interactions are unplanned, they are quite visible.

To explain the immediate effects one component has on one or more others, Charles describes the levels of coupling:

Tight Coupling - a mechanical term meaning there is no slack or buffer between two things. What happens in one directly affects what happens in the other.

Loose Coupling - this does not mean inefficient. It allows certain parts of the system to express themselves according to their own logic or interests, and it makes processing delays possible without breaking everything downstream.
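To make the slack/buffer idea a bit more concrete, here is a tiny toy sketch of my own (not from the book). It assumes a made-up two-stage process where an upstream stage delivers one unit per tick and a downstream stage uses one unit per tick. The function name `downstream_stalls`, the outage ticks, and the buffer sizes are all hypothetical, chosen only for illustration.

```python
def downstream_stalls(outage_ticks, total_ticks, buffer_stock):
    """Count ticks on which the downstream stage has nothing to work on.

    Toy model (an assumption for illustration, not Perrow's):
    - upstream delivers one unit per tick unless the tick is in outage_ticks
    - downstream consumes one unit per tick if any stock is available
    - buffer_stock is the slack sitting between the two stages at the start
    """
    stock = buffer_stock
    stalls = 0
    for tick in range(total_ticks):
        if tick not in outage_ticks:
            stock += 1  # upstream delivers one unit
        if stock > 0:
            stock -= 1  # downstream consumes one unit
        else:
            stalls += 1  # nothing available: the upstream failure propagates
    return stalls


outage = {3, 4, 5}  # upstream is down for three ticks

tight = downstream_stalls(outage, total_ticks=10, buffer_stock=0)
loose = downstream_stalls(outage, total_ticks=10, buffer_stock=3)

print("tight coupling, downstream stalls:", tight)
print("loose coupling, downstream stalls:", loose)
```

With no buffer, every upstream hiccup lands directly on the downstream stage. With a little slack in between, the same outage can be absorbed. Real systems are obviously far messier than this, but it is the intuition I took away from the definitions above.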

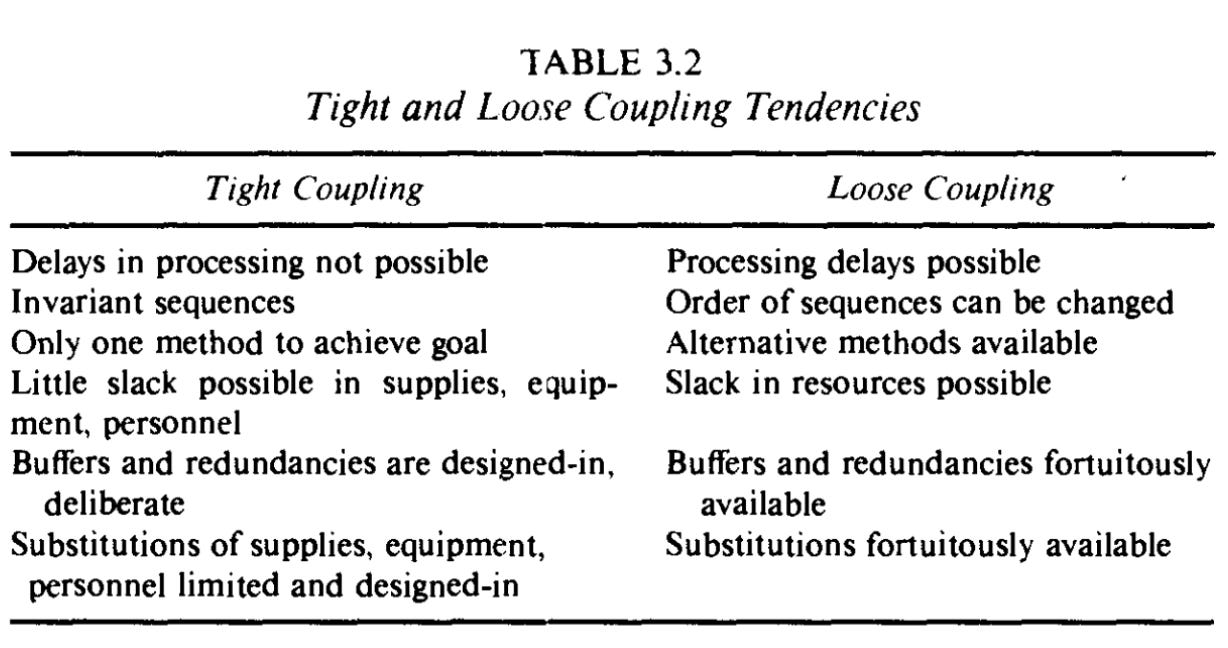

He then plots where various industries, companies, and organizations land on these two axes. The upper-right quadrant poses the greatest potential risk, given its combination of complexity, tight coupling, and catastrophic potential.

"When societies confront a new or explosively growing evil, the number of risk assessors probably grows. Whether they are shamans or scientists ... their function is not only to inform and advise the masters of these systems about the risks and benefits, but also, should the risk be taken, to legitimize and reassure the subjects."

Charles Perrow, Normal Accidents, page 307

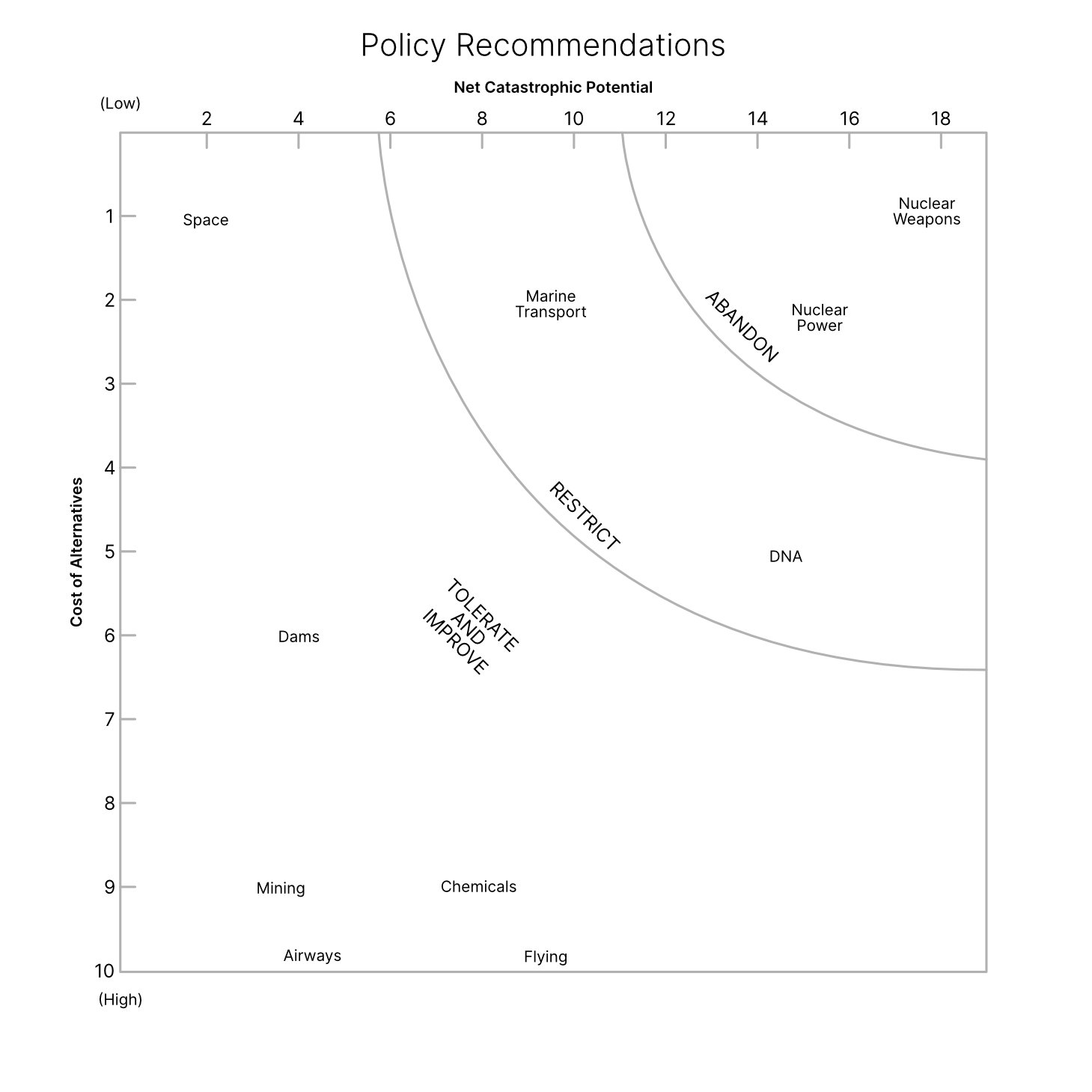

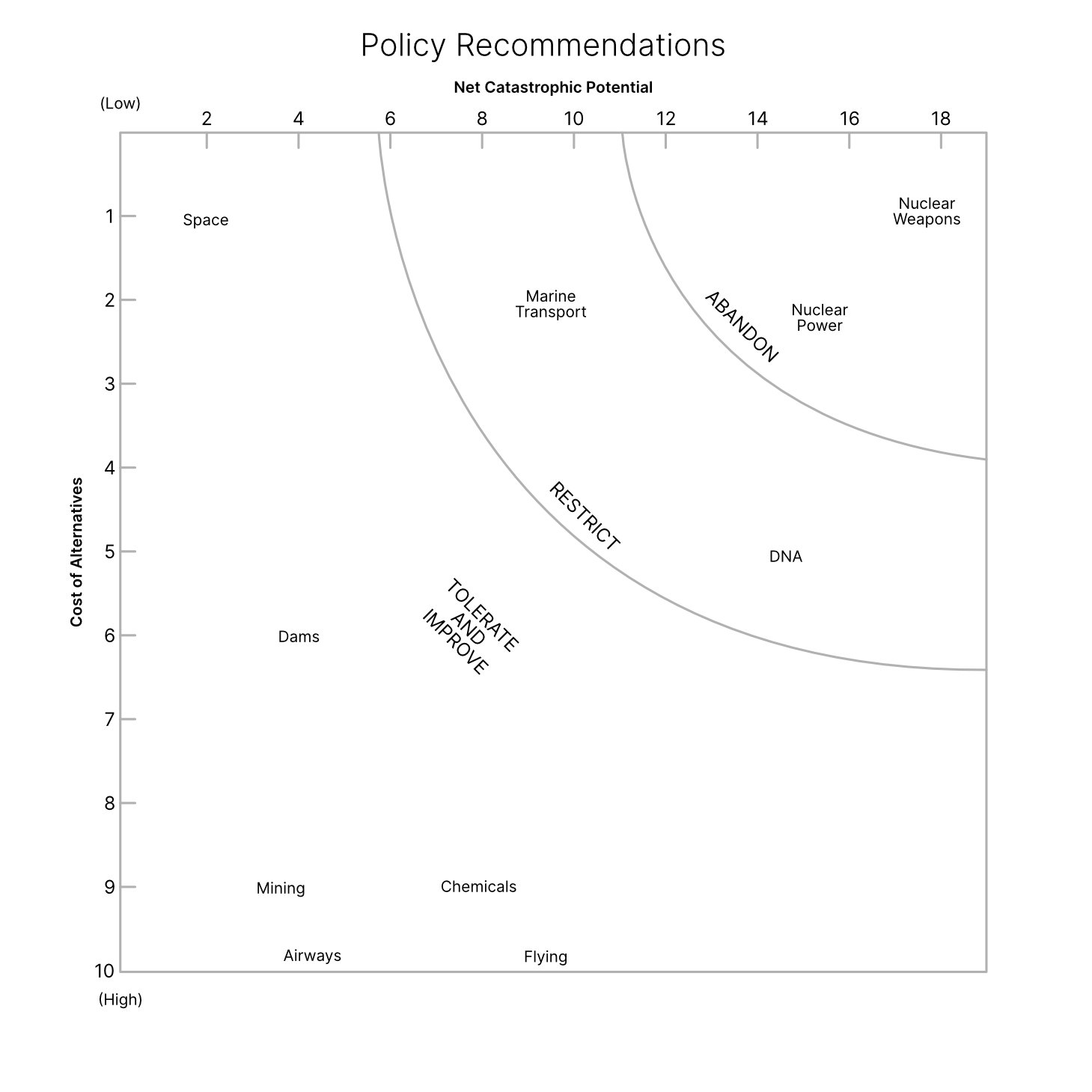

His conclusion for risky tech is to abandon it, restrict it, or tolerate and improve it, based on the cost of alternatives and the net catastrophic potential. Personally, I disagree with his stance on abandoning nuclear power, though I absolutely agree that the balancing of redundant systems needs serious consideration. With environmental changes and an increased need for energy, nuclear provides clean and consistent energy.

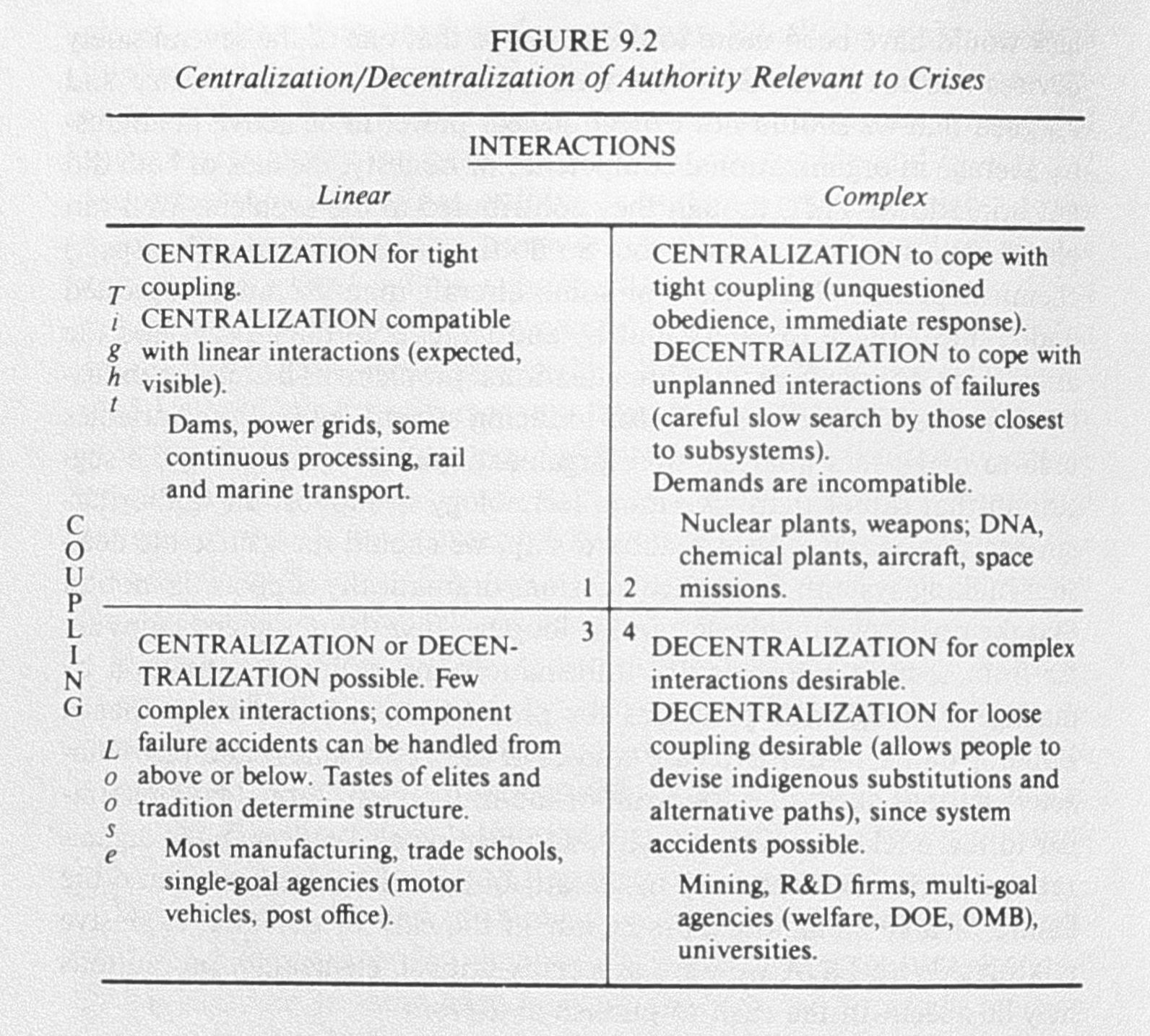

He also recommends levels of centralization/decentralization of authority that various entities or industries should adopt, depending on their type of coupling and complexity.


His book was published two years before the space shuttle Challenger disaster.

Later covers of the book adopted this photo, taken 73 seconds after launch.

Political and bureaucratic pressure to ignore engineers calling for a delay resulted in the loss of seven astronauts. This was an example of a tightly coupled system with little flexibility when it came to even a single initial component failure.

back row: Ellison Onizuka, Sharon "Christa" McAuliffe, Gregory Jarvis, Judith Resnik

front row: Michael Smith, Francis "Dick" Scobee, Ronald McNair

What I take from this all:

Redundancies made in the name of safety can sometimes cause more of a hazard or reduce reliability.

Next time you hear of a catastrophic failure that is reported to be the sole fault of one worker, be very skeptical.

If you want to know more about "errors" on an individual scale, I would recommend Don Norman's The Design of Everyday Things. I will probably do another summary like this in the future on that book.